Google Supports Microformats, Rolls Out Rich Snippets

The annual Google Searchology conference, held recently, revealed some interesting offerings in the search marketing field. In previous years, Google introduced personalised search and universal search at the same event.

Google has announced support for microformats through its new rich snippets. Yahoo’s Search Monkey has already implemented them. But it is still not clear whether the search engines actually read them. Local search results have been rumoured to use microformats, but there is no evidence to back this up either.
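For readers new to microformats: hCard marks up contact details inside ordinary HTML using agreed-upon class names such as `vcard`, `fn`, `org`, `adr` and `tel`. The sketch below uses only Python's standard `html.parser` to pull those properties out of a made-up business listing. It illustrates what a microformat-aware parser looks for. It is not how Google, Search Monkey or any local search engine actually processes pages, and it handles only a small subset of the hCard properties.

```python
from html.parser import HTMLParser

# Small subset of hCard property class names handled by this sketch.
HCARD_PROPS = {"fn", "org", "street-address", "locality", "tel"}
# Elements that never get an end tag, so they must not be pushed on the stack.
VOID_TAGS = {"br", "img", "hr", "meta", "link", "input"}


class HCardParser(HTMLParser):
    """Collect text for hCard properties found inside class="vcard" elements."""

    def __init__(self):
        super().__init__()
        self.cards = []   # one dict of property -> text per vcard found
        self._stack = []  # class sets of the currently open (non-void) elements

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = set((dict(attrs).get("class") or "").split())
        if "vcard" in classes:
            self.cards.append({})
        self._stack.append(classes)

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._stack:
            self._stack.pop()

    def handle_data(self, data):
        text = data.strip()
        # Ignore whitespace and any text outside a vcard.
        if not text or not any("vcard" in c for c in self._stack):
            return
        # Attribute the text to the innermost open element carrying a property.
        for classes in reversed(self._stack):
            props = classes & HCARD_PROPS
            if props:
                card = self.cards[-1]
                for prop in props:
                    card[prop] = (card.get(prop, "") + " " + text).strip()
                break


html = """
<div class="vcard">
  <span class="fn org">Joe's Pizza</span>
  <div class="adr">
    <span class="street-address">123 Main St</span>,
    <span class="locality">Springfield</span>
  </div>
  <span class="tel">555-0100</span>
</div>
"""
parser = HCardParser()
parser.feed(html)
print(parser.cards)
```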


Posted by Ravi of Netconcepts Ltd. on 05/27/2009


Filed under: General, Search Engine Optimization | Tags: Google Profiles, Google Search Options, google searchology, hcalendar, hCard, microformats, RDF, rich snippets

Key to Relevance: Title Tags

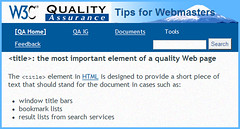

I recently penned an article at Search Engine Land on Leveraging Reverse Search For Local SEO. In it, I describe how, in certain exceptional cases, one may benefit from adding the street address to a business site’s TITLE tag. It’s not the first time I have mentioned how TITLE tags are key to relevance in Local Search. I’d previously mentioned how critical it is for local businesses to include their category keywords and city names in the TITLE as well.

Yet a great many sites still miss this vital key to relevance, and then wonder why they fail to rank for their most apropos keywords, keywords for which they would otherwise have a very good chance of ranking!

W3C calls the TITLE the “most important element of a quality web page.”
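As a rough illustration of that advice, here is a hypothetical helper that assembles a local business TITLE. The category keyword and city go first, the street address is added only for the exceptional cases mentioned above, and the business name goes last. The function name, field order, separator and the 65-character cutoff are all assumptions for this sketch, not rules from Google or the W3C.

```python
from typing import Optional

MAX_TITLE_LEN = 65  # assumed soft limit; engines truncate long titles in results


def build_local_title(business: str, category: str, city: str,
                      street_address: Optional[str] = None) -> str:
    """Put category keyword and city first, then optional address, then name."""
    parts = [f"{category} in {city}"]
    if street_address:
        parts.append(street_address)  # only for the exception cases discussed above
    parts.append(business)
    title = " | ".join(parts)
    if len(title) > MAX_TITLE_LEN:
        # Trim at a word boundary and drop any dangling separator.
        title = title[:MAX_TITLE_LEN].rsplit(" ", 1)[0].rstrip(" |")
    return title


print(build_local_title("Acme Plumbing", "Plumbers", "Dallas, TX"))
# Plumbers in Dallas, TX | Acme Plumbing
```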


Posted by Chris of Silvery on 04/10/2009


Filed under: Best Practices, Content Optimization, General, Google, HTML Optimization, Keyword Research, Local Search Optimization, Search Engine Optimization, SEO, Tricks | Tags: key relevance, Keyword-Positions, Keyword-Rankings, page-titles, SEO, title-tags, w3c

Brief Posting Hiatus

As some of you likely noticed, I took a brief hiatus from posting here on Natural Search Blog. We had some technical difficulties here, and I have changed jobs in the last few months.

However, the generous folx at Netconcepts have encouraged me to continue as one of the group blog writers herein, so you can continue to expect to see articles from me coming through this pipe!

Hope everyone had great holidays, and here’s hoping for a Happy New Year (with increasing commerce, too)!


Posted by Chris of Silvery on 01/14/2009


Filed under: General

Cleaning Up the Retail Site Navigation Mess

In recent years, many retailers have implemented powerful attribute-based navigation systems that make it easier for customers to filter, sort, navigate, and buy from a retailer’s inventory. Most of our clients who have implemented these technologies report good results: increased conversion and sales. From the natural search perspective, many such marketers are pleasantly surprised to find that the number of their pages indexed by Google increases after they implement such systems. But in this case, what’s good for users is actually not so good for search engines, or for your bottom line.

Last week, Google Webmaster Central weighed in on this. It described the “infinite filtering” and “resulting page duplication” produced by such guided navigation systems as bad for bots, and urged that the “blech” of duplicated pages be cleaned up:

… Another common scenario is websites which provide for filtering a set of search results in many ways. A shopping site might allow for finding clothing items by filtering on category, price, color, brand, style, etc. The number of possible combinations of filters can grow exponentially. This can produce thousands of URLs, all finding some subset of the items sold. This may be convenient for your users, but is not so helpful for the Googlebot, which just wants to find everything – once!

… One fix is to eliminate whole categories of dynamically generated links using your robots.txt file… Another option is to block those problematic links with a “nofollow” link attribute.

While useful for consumers, the attribute filters also produce unique URL parameters based on elements like product colors, sizes, price, popularity, the number of results per page, and the pagination construct itself. The result is dozens or hundreds of unique crawlable URL permutations. But these unique URLs contain content that is duplicated in nearly all respects, and duplicate content of this magnitude hurts your natural search marketing performance.
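To make the “grows exponentially” point concrete, assume each attribute is either left unset or set to exactly one of its values. The number of distinct filter combinations is then the product of (values + 1) across attributes, and sort order and page size multiply it further before pagination is even counted. The attribute names and counts below are invented for illustration.

```python
from math import prod

# Hypothetical filter attributes for ONE category page: attribute -> number of values
filters = {"price_range": 5, "color": 8, "brand": 12, "size": 6}
sort_orders = 4  # e.g. price ascending/descending, popularity, newest
page_sizes = 3   # e.g. 24, 48, 96 results per page

# Each attribute is either unset (+1) or set to exactly one of its values.
filter_combinations = prod(n + 1 for n in filters.values())
crawlable_urls = filter_combinations * sort_orders * page_sizes

print(filter_combinations)  # 4914  (6 * 9 * 13 * 7)
print(crawlable_urls)       # 58968, before counting pagination
```

Many of those combinations return the same or an empty product set, which is exactly the duplication described above.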

Here’s an example from the Artful Home brand: Compare this page to this page. The URL addresses are unique, but the content is the same. Click on the “price range,” “color,” or “medium” attributes in the left navigation and you’ll get even more URLs with the same content. In fact, Google has indexed 20 URL permutations containing the exact same content for this one page: the same title tags, headings, body copy, and more.
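One way to audit how many of your own URL permutations serve the same page is to fingerprint the elements named above (title, heading, body copy) and group URLs that share a fingerprint. This sketch assumes you have already fetched each page and extracted those three fields. The URLs and page text are hypothetical, and exact hashing only catches verbatim duplicates, not near-duplicates.

```python
import hashlib
from collections import defaultdict


def fingerprint(title: str, h1: str, body: str) -> str:
    """Hash the whitespace-normalized, lowercased title, heading, and body copy."""
    normalized = "\n".join(" ".join(part.split()).lower() for part in (title, h1, body))
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_duplicates(pages: dict) -> list:
    """Return sorted lists of URLs that share an identical fingerprint (2+ URLs each)."""
    groups = defaultdict(list)
    for url, (title, h1, body) in pages.items():
        groups[fingerprint(title, h1, body)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl results: URL -> (title, h1, body copy)
pages = {
    "/jewelry/necklaces": ("Necklaces", "Necklaces", "Handmade necklaces from studio artists."),
    "/jewelry/necklaces?color=blue": ("Necklaces", "Necklaces", "Handmade necklaces from studio artists."),
    "/jewelry/necklaces?price=100-250": ("Necklaces", "Necklaces", "Handmade  necklaces from studio artists."),
    "/jewelry/rings": ("Rings", "Rings", "Handmade rings from studio artists."),
}
print(group_duplicates(pages))
```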

So let me give you 5 natural search marketing performance issues introduced by attribute-based retail navigation models (as typically implemented):

- You end up creating self-competition between your site’s own pages. (If you were Googlebot, which of these would you consider the authority page?)

- Your pages don’t resonate with searchers. (People seldom include attributes like price in their search queries.)

- You bloat the engine index. (You don’t have as many pages indexed as you’re bragging about to your CEO.)

- You burn your “crawl equity.” (More of your unimportant pages get crawled with each visit a bot makes.)

- You fragment your available link popularity (PageRank) between so many different versions of the same/similar pages.

To deal with the situation, merchants with attribute-based navigation systems need more sophisticated strategies and execution capabilities.

For example, the on-page attributes that create the navigation scheme should be researched during the design phase to ensure that your resulting landing page themes are consistent with searcher vocabulary. Filtering options that do not benefit your natural search performance should be evaluated the same way, and tactics should be put in place to keep any “false” landing pages out of the bots’ crawl and index. A combination of nofollow attributes, meta noindex tags, and robots.txt disallow rules should be employed for this. This is easier said than done, as most merchants need IT resources to modify the site architecture in this way.
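Here is one way the meta noindex part of those rules might be expressed. Each filter parameter is classified as either matching searcher vocabulary, which makes it worth a crawlable landing page, or not. A robots meta value is then emitted for each URL. Deciding which parameters belong in which set is the research the paragraph calls for, so the parameter names and sets below are placeholders. The sketch does not cover the nofollow or robots.txt pieces.

```python
from urllib.parse import urlsplit, parse_qsl

# Placeholder classification; derive these from your own keyword research.
SEARCHER_ATTRIBUTES = {"color", "brand"}                  # e.g. "blue necklaces"
NON_SEARCH_PARAMS = {"price", "sort", "per_page", "page"}  # rarely in queries


def robots_meta_for(url: str) -> str:
    """Return a robots meta content value for a faceted-navigation URL."""
    params = dict(parse_qsl(urlsplit(url).query))
    if not params:
        return "index, follow"    # the base category page
    if any(p in NON_SEARCH_PARAMS for p in params):
        return "noindex, follow"  # keep it out of the index but let links flow
    if len(params) == 1 and set(params) <= SEARCHER_ATTRIBUTES:
        return "index, follow"    # one attribute that matches searcher vocabulary
    return "noindex, follow"      # stacked or unrecognized filters


print(robots_meta_for("/necklaces?color=blue"))              # index, follow
print(robots_meta_for("/necklaces?color=blue&price=100-250"))  # noindex, follow
```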

(If you’re in this boat, consider a proxy site technology like GravityStream to make the generation and application of such rules-based changes simple and automatic across a large-scale site, with no additional IT effort. Plus, GravityStream now gives you the additional capability to automatically resolve the many parameter-filled URL permutations created by such navigation systems into singular, authoritative versions of each category, subcategory, and product-level URL (a form of “canonicalization” via intelligent redirection). By eliminating page duplication in this fashion, you maximize the distribution of your available link popularity to the greatest number of unique landing pages.)
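The general idea of canonicalization via redirection can be sketched generically. This is not GravityStream’s implementation, which the post does not describe. The approach strips parameters that don’t change which category or product is shown, sorts the remaining ones, and issues a 301 when the requested URL differs from the result. The allowlist of canonical parameters is an assumption.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed: only these parameters identify a distinct category or product page.
CANONICAL_PARAMS = ("category", "product_id")


def canonical_url(url: str) -> str:
    """Drop non-canonical parameters and sort the rest so permutations collapse."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k in CANONICAL_PARAMS)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def redirect_for(url: str) -> Optional[tuple]:
    """Return (301, location) for a non-canonical permutation, else None."""
    target = canonical_url(url)
    return (301, target) if target != url else None


print(redirect_for("http://example.com/shop?sort=price&color=red&category=rings"))
# (301, 'http://example.com/shop?category=rings')
```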

Google’s position on this matter may seem to contradict their Webmaster Quality Guideline around “making your site for users not search engines.” After all, attribute-based navigation systems do offer many great user benefits. But they will unquestionably dilute your natural search marketing performance if not engineered with search engines in mind. Today’s savvy merchant is already implementing sophisticated strategies to capture the best of both worlds.

Brian


Posted by Brian of Brian on 12/04/2008


Filed under: General

Great Material For In-House SEOs

I’ll be giving a presentation at the upcoming Search Engine Strategies (SES) San Jose conference on “How to Speak Geek: Working Collaboratively With Your IT Department to Get Stuff Done.”

Search Engine Strategies Conference

This promises to be a great session, and I have some cool tips to share on how to communicate effectively with one’s technology department staff in order to break down barriers, cut through red tape, and get changes deployed that boost site traffic.


Posted by Chris of Silvery on 08/05/2008


Filed under: Conferences, General, Marketing, Seminars | Tags: Conferences, Googledance, search-engine-strategies, ses

Amazon’s Secret to Dominating SERP Results

Many e-tailers have looked with envy at Amazon.com’s sheer omnipresence within the search results on Google. Search for any product ranging from new book titles, to new music releases, to home improvement products, to even products from their new grocery line, and you’ll find Amazon links garnering page 1 or 2 rankings on Google and other engines. Why does it seem like such an unfair advantage?

Can you keep a secret? There is an unfair advantage. Amazon is applying conditional 301 URL redirects through their massive affiliate marketing program.

Most online merchants outsource the management and administration of their affiliate program to a provider that tracks all affiliate activity using special tracking URLs. Because affiliate links point to these third-party tracking URLs rather than directly to the merchant’s pages, they typically break the link association between affiliate and merchant site pages. As a result, most of these merchants’ natural search traffic comes from brand-related keywords rather than long tail keywords. Most merchants can only imagine the sudden natural search boost they’d get from tens of thousands of existing affiliate sites deep-linking to their website pages with great anchor text. But not Amazon!

Amazon’s affiliate (“associate”) program is fully integrated into the website. So the URL you get by clicking from, for example, Guy Kawasaki’s blog to buy one of his favorite books from Amazon doesn’t route you through a third-party tracking URL, as would be the case with most merchant affiliate programs. Instead, it links to an Amazon.com URL (to be precise: http://www.amazon.com/exec/obidos/ASIN/0060521996/guykawasakico-20), with the associate’s name at the end of the URL so Guy can earn his commission.

However, request that same URL with your browser’s User Agent set to Googlebot, and you’ll see what Googlebot (and other crawlers) get: a 301 redirect to http://www.amazon.com/Innovators-Dilemma-Revolutionary-Business-Essentials/dp/0060521996. That’s the same URL that shows up in Google when you search for this book title.

So if you are a human coming in from affiliate land, you get one URL, which is used to track your referrer’s commission. If you are a bot visiting that URL, you are told it has permanently moved to the keyword URL. In this way, Amazon is able to have its cake and eat it too: it runs an owned-and-operated affiliate management system while harvesting the PageRank from millions of deep affiliate backlinks to maximize its ranking visibility for long tail search queries.
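The behavior described above can be modeled roughly as follows. This is a hypothetical reconstruction from the observed responses, not Amazon’s code. Bot detection is a naive User-Agent substring check, the ASIN-to-keyword-URL table is invented for the example, and only associate links are modeled.

```python
import re

# Hypothetical lookup from ASIN to the keyword-rich product path.
KEYWORD_PATHS = {
    "0060521996": "/Innovators-Dilemma-Revolutionary-Business-Essentials/dp/0060521996",
}
BOT_TOKENS = ("googlebot", "slurp", "msnbot")  # naive crawler detection (assumption)
ASSOCIATE_URL = re.compile(r"^/exec/obidos/ASIN/(\w{10})/([\w-]+)$")


def handle_request(path: str, user_agent: str) -> tuple:
    """Return (status, redirect_location, associate_tag) for a request path."""
    match = ASSOCIATE_URL.match(path)
    if not match:
        return (200, None, None)  # not an associate link: serve normally
    asin, associate_tag = match.groups()
    keyword_path = KEYWORD_PATHS.get(asin)
    if keyword_path and any(t in user_agent.lower() for t in BOT_TOKENS):
        # Crawlers are told the associate URL has permanently moved.
        return (301, keyword_path, None)
    # Humans get the page, and the associate tag is kept for commission tracking.
    return (200, None, associate_tag)
```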

(Note I’ve abstained from hyperlinking these URLs so bots crawling this content do not further entrench Amazon’s ranking on these URLs, although they are already #4 in the query above!).

So is this strategy ethical? Conditional redirects are a no-no because they send mixed signals to the engine: has the URL permanently moved or not? If it has, but only for bots, then you are crossing the SEO line. But in Amazon’s case, it appears that searchers and general site users also get the keyword URL, so only the affiliate users get an “old” URL. If that’s the case across the board, it would be difficult to argue that Amazon is abusing this concept. Rather, it has cleverly engineered a solution to a visibility problem that other merchants would replicate if they could. In fact, from a searcher perspective, were it not for Amazon, many long tail product queries consumers conduct would return zero recognizable retail brands to buy from, with all due respect to PriceGrabber, DealTime, BizRate, NexTag, and eBay.

As a result of this long tail strategy, I’d speculate that Amazon’s natural search keyword traffic distribution looks more like 40/60 brand to non-brand, rather than the typical 80/20 or 90/10 distribution curve most merchants (who lack affiliate search benefits) receive.


Posted by Brian of Brian on 06/03/2008


Filed under: General, Google, PageRank, Search Engine Optimization, SEO, Site Structure, Tracking and Reporting, URLs

Lawmakers Ask Charter Communications Not To Share Consumer Data With NebuAd

Two lawmakers have asked Charter Communications not to share data with NebuAd, a company that collects users’ web surfing information in order to enable advertisers to behaviorally target ad campaigns to them.


I previously wrote about NebuAd and highlighted one major hiccup I saw in their business model: consumer sensitivity about private data.

It appears that NebuAd is facing the consumer resistance I earlier predicted.


Posted by Chris of Silvery on 05/19/2008


Filed under: General, Marketing, Security | Tags: behavioral targeting, Nebu Ad, NebuAd, online privacy

Internet Retailers Finding Growth During The Recession

I earlier wrote about how businesses could take advantage of a recession by swooping in to grab market share from more fearful businesses that might choose to cut back on advertising and expansion during an uncertain period. Now Forrester Research and Shop.org have released survey results indicating that many online merchants are seeing growth while brick-and-mortar businesses are experiencing reduced sales.

The one cautionary note a Forrester analyst added to the release was that many retailers are apparently planning to advertise more in social networking sites like MySpace and Facebook, even though it’s “still unproven how such sites might build direct revenue for retailers” (paraphrased).

I’d note that many of us in internet marketing have identified fairly significant promotional potential in social media sites, and that some degree of integration with them is possible in many cases without incurring advertising costs. So judicious campaigns may still be worth attempting, even without much research evidence indicating good ROI. As with any promotional campaign, it’s important to measure results as you go and adjust as indicated.


Posted by Chris of Silvery on 04/08/2008


Filed under: General, Market Data, Social Media Optimization | Tags: economy, internet retail, online retail, Recession

Corporate Blogging: Too Legally Dicey To Allow?

This article at CNET, “Corporate employee blogs: Lawsuits waiting to happen?”, caught my eye. Large corporations definitely feel nervous about allowing all their employees to have a public voice, but I think it’s now something that must be allowed. Good common-sense management can help avoid some of the risk of lawsuits like the one involving Cisco mentioned in the article.

Some companies’ legal departments think that blogging is just too risky to allow, and that it’s not worth the time and administrative headache to try to manage. The problem I see with this is that it leaves a company stuck in a Business 1.0 world of the past, shutting out the grass-roots public relations that employees can provide. Blogging allows a big corporation to have a human face and can help explain and communicate what the company is up to.


Posted by Chris of Silvery on 03/26/2008


Filed under: Best Practices, General, News | Tags: blogging, corporate blogging, employee blogs

Flickr As New YouTube Killer?

Michael Arrington at Techcrunch reports that video is coming soon to Flickr.

This is great news, and I think it could become an overnight major competitor to YouTube — having both types of media available via one site/service makes for a lot of convenience.

People may not realize that this has already been possible to a small degree: you can upload an animated GIF to Flickr (see this example, where I uploaded an animated sequence of a glider’s movement from Conway’s Game of Life).


Posted by Chris of Silvery on 03/17/2008


Filed under: General, Image Optimization | Tags: flickr, Yahoo, YouTube