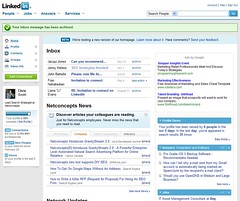

LinkedIn Beta Tests New Homepage Layout

So, I’m at the SMX West conference tonight, hopping around networking with people and every so often updating my Flickr pics or sending work emails, when I noticed that LinkedIn is apparently beta-testing a new homepage layout on me. Check out the screengrab of what I see now when I log in to LinkedIn:

They want feedback on their new design, so I’ll give it here. (more…)

Posted by Chris of Silvery on 02/28/2008

Filed under: Best Practices, Design, Social Media Optimization | Tags: linkedin, Social-Media, website-design

Organic Search Marketing in 2008: Predictions

If you’re even the slightest bit aware of what’s been going on in organic search marketing, you couldn’t help but know that Google made a number of changes during 2007 that impacted the natural search marketing programs of many webmasters. So here’s my little post predicting where I see the trends pointing and what we can expect in 2008 and beyond… (more…)

Posted by Chris of Silvery on 01/10/2008

Filed under: Best Practices, Design, Google, Search Engine Optimization, SEO | Tags: Google, Search Engine Optimization, SEO, Social-Media, usability, User-Centered-Design

Advice on Subdomains vs. Subdirectories for SEO

Matt Cutts recently revealed that Google is now treating subdomains much more like subdirectories of a domain, in the sense that Google wants to limit how many results from a single site show up for a given keyword search. In the past, some search marketers used keyworded subdomains to try to improve referral traffic from search engines, deploying many keyword subdomains for terms for which they hoped to rank well.

Not long ago, I wrote an article on how some local directory sites were using subdomains in an attempt to achieve good ranking results in search engines. In that article, I concluded that most of these sites were ranking well for other reasons not directly related to the presence of the keyword as a subdomain — I showed some examples of sites which ranked equally well or better in many cases where the keyword was a part of the URI as opposed to the subdomain. So, in Google, subdirectories were already functioning just as well as subdomains for the purposes of keyword rank optimization. (more…)

Posted by Chris of Silvery on 12/12/2007

Filed under: Best Practices, Content Optimization, Domain Names, Dynamic Sites, Google, Search Engine Optimization, SEO, Site Structure, URLs, Worst Practices | Tags: Domain Names, Google, host crowding, language seo, Search Engine Optimization, SEO, seo subdirectories, subdomain seo, subdomains

Google’s Advice For Web 2.0 & AJAX Development

Yesterday, Google’s Webmaster Blog gave some great advice for Web 2.0 application developers in their post titled “A Spider’s View of Web 2.0”.

In that post, they recommend providing alternative navigation options on Ajaxified sites so that the Googlebot spider can index your site’s pages, and so that users who have certain dynamic functions disabled in their browsers can still get around. They also recommend designing sites with “Progressive Enhancement”: designing a site iteratively over time by beginning with the basics first. Start out with simple HTML linking navigation and then add JavaScript/Java/Flash/AJAX structures on top of that simple HTML structure.
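To make the "simple HTML navigation first" idea a bit more concrete, here is a rough Python sketch that lists the links on a page that still work with scripting turned off. It is only an illustration: the `PlainLinkCollector` class, the `crawlable_links` helper, and the sample markup (including the `loadPanel` calls) are all made up for this example, and real spiders are certainly more sophisticated than this.

```python
from html.parser import HTMLParser


class PlainLinkCollector(HTMLParser):
    """Collects href values from <a> tags that work without scripting."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip anchors that only do something when JavaScript runs.
        if href and href != "#" and not href.lower().startswith("javascript:"):
            self.links.append(href)


def crawlable_links(html):
    """Return the plain hrefs found in the given HTML string."""
    collector = PlainLinkCollector()
    collector.feed(html)
    return collector.links


# Hypothetical navigation block mixing plain and script-only links.
SAMPLE_PAGE = """
<ul id="nav">
  <li><a href="/products/">Products</a></li>
  <li><a href="#" onclick="loadPanel('about')">About</a></li>
  <li><a href="javascript:loadPanel('contact')">Contact</a></li>
  <li><a href="/stores/">Stores</a></li>
</ul>
"""

print(crawlable_links(SAMPLE_PAGE))
```

In this made-up page only two of the four navigation items come back, which is roughly the situation the Google post warns about: the "About" and "Contact" content has no plain URL for a spider to follow.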

Before the Google Webmaster team had posted those recommendations, I’d published a little article earlier this week on Search Engine Land on the subject of how Web 2.0 and Map Mashup Developers neglect SEO basics. A month back, my colleague Stephan Spencer also wrote an article on how Web 2.0 is often search-engine-unfriendly and how using Progressive Enhancement can help make Web 2.0 content findable in search engines like Google, Yahoo!, and Microsoft Live Search.

Even earlier than both of us, our colleague P.J. Fusco wrote an article for ClickZ on How Web 2.0 Affects SEO Strategy back in May.

We’re not just recycling each other’s work in all this. We’re each independently convinced that problematic Web 2.0 site design can limit a site’s traffic. If your pages don’t get indexed by the search engines, there’s a far lower chance of users finding your site. With just a mild amount of additional care and work, Web 2.0 developers can optimize their applications, and the benefits are clear. Wouldn’t you like to make a little extra money every month on ad revenue? Better yet, how about if an investment firm or a Google or Yahoo were to offer you millions for your cool mashup concept?!?

But don’t just listen to all the experts at Netconcepts: Google’s confirming what we’ve been preaching for some time now.

Posted by Chris of Silvery on 11/07/2007

Filed under: Best Practices, Google, Search Engine Optimization, SEO | Tags: AJAX, Google, Mashups, Progressive-Enhancement, Search Engine Optimization, SEO, Web-2.0

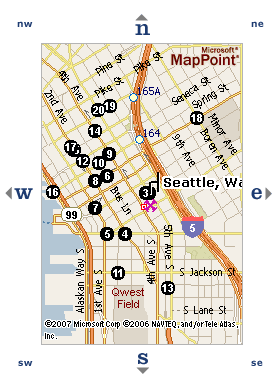

Dealer Locator & Store Locator Services Need to Optimize

My article on local SEO for store locators was just published on Search Engine Land, and any company that has a store locator utility ought to read it. Many large companies provide a way for users to find their local stores, dealers, or authorized resellers. The problem is that these sections are usually hidden from the search engines behind search submission forms, JavaScript links, HTML frames, and Flash interfaces.

For many national or regional chain stores, providing dealer-locator services with robust maps, driving directions and proximity search capability is outside of their core competencies, and they frequently choose to outsource that development work or purchase software to enable the service easily.
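As a rough illustration of the alternative to hiding locations behind a search form, the Python sketch below builds a plain HTML list of links, one static URL per store, that a spider could follow. Everything in it is an assumption made for the example: the `/stores/state/city/name/` URL pattern, the `slugify`, `store_url` and `store_index_html` helpers, and the sample store records are all hypothetical, not taken from any particular locator product.

```python
import re
from html import escape


def slugify(text):
    """Lowercase the text and join its words with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def store_url(state, city, name):
    """Build a static, keyword-bearing path for one store (hypothetical pattern)."""
    return "/stores/{}/{}/{}/".format(slugify(state), slugify(city), slugify(name))


def store_index_html(stores):
    """Render a plain <ul> of links so every store page is reachable by a crawler."""
    items = []
    for store in stores:
        url = store_url(store["state"], store["city"], store["name"])
        label = "{} ({}, {})".format(store["name"], store["city"], store["state"])
        items.append('  <li><a href="{}">{}</a></li>'.format(url, escape(label)))
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


# Made-up sample data for illustration only.
SAMPLE_STORES = [
    {"name": "Main St. Outlet", "city": "Fort Worth", "state": "TX"},
    {"name": "Harbor Plaza", "city": "San Jose", "state": "CA"},
]

print(store_index_html(SAMPLE_STORES))
```

The point of the sketch is simply that each location gets its own linkable page; the form-based search can still sit on top of it for users who prefer to type in a ZIP code.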

I did a quick survey and found a number of companies providing dealer locator or store finder functionality: (more…)

Posted by Chris of Silvery on 09/13/2007

Filed under: Best Practices, Content Optimization, Dynamic Sites, Local Search Optimization, Maps, Search Engine Optimization, SEO, Site Structure | Tags: chain-stores, dealer-locators, Local Search Optimization, local-SEO, store-location-software, store-locators

Double Your Trouble: Google Highlights Duplication Issues

Maile Ohye posted a great piece on Google Webmaster Central on the effects of duplicate content as caused by common URL parameters. There is great information in that post, not least of which is that it validates exactly what a few of us have stated for a while: duplication should be addressed because it can water down your PageRank.
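For anyone wondering what "duplication caused by URL parameters" looks like in practice, here is a small Python sketch of one common way to deal with it on your own side: collapsing URL variants into a single canonical form. It is a sketch under assumptions, not a description of anything Google does. The `canonical_url` helper and the `IGNORED_PARAMS` set are hypothetical, and which parameters are safe to drop depends entirely on your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change the page content on this
# imaginary site; the right list is site-specific.
IGNORED_PARAMS = {"sessionid", "sid", "ref", "trackingid"}


def canonical_url(url):
    """Drop content-neutral parameters and put the rest in a stable order."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # same parameters in a different order become one URL
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


variants = [
    "http://www.example.com/widgets?sid=123&color=blue&category=5",
    "http://WWW.Example.com/widgets?color=blue&category=5&ref=home",
    "http://www.example.com/widgets?category=5&color=blue",
]

for variant in variants:
    print(canonical_url(variant))
```

All three variants print the same address, so internal links and redirects could point at that one URL instead of spreading across three.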

Maile suggests a few ways of addressing dupe content, and she also reveals a few details of Google’s workings that are interesting, including: (more…)

Posted by Chris of Silvery on 09/12/2007

Filed under: Best Practices, Dynamic Sites, Google, PageRank, Search Engine Optimization, SEO, Site Structure, URLs | Tags: Canonicalization, duplicate-content, duplication, Google, Search Engine Optimization, SEO

Resurrection of the Meta Keywords Tag

Danny Sullivan did a great, comprehensive examination of the current status of the Meta Keywords tag, and his testing showed that both Ask and Yahoo will still use content in that tag as a relevancy signal. Neither Google nor Microsoft Live does. His clear outline of the history, common questions, and contemporary testing of the factor was really helpful.

However, I think there’s still a case where Google may be using the Meta Keywords tag… (more…)

Posted by Chris of Silvery on 09/07/2007

Filed under: Best Practices, Search Engine Optimization, SEO, Tricks, Worst Practices | Tags: black-hat-seo, Google, Meta-Keywords-Tag, meta-tags, metatags

SES Session on Universal & Blended Vertical Search

I’m busy attending this year’s Search Engine Strategies Conference (SES) in San Jose, but I thought it’d be worthwhile to pause for half a minute in the flurry of sessions and networking to mention a couple of interesting things I heard from Google in yesterday’s session on Universal and Blended Vertical Search.

Posted by Chris of Silvery on 08/21/2007

Filed under: Best Practices, Google, Searching | Tags: David-Bailey, Google, Google-Universal-Search, search-engine-strategies, SES-Conference, Universal-Search, Vertical-Search

Now MS Live Search & Yahoo! also treat Underscores as word delimiters

I highlighted earlier how Stephan reported on Matt Cutts revealing that Google treats underscores as white-space characters. Now Barry Schwartz has done a fantastic follow-up by asking each of the search engines whether they also treat underscores just like dashes and other white-space characters, and they’ve verified that they handle them similarly. This is another incremental paradigm shift in search engine optimization!
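To show what "treating underscores as word delimiters" means for a URL, here is a toy Python sketch that splits a path into keywords, with underscores handled the same way as dashes. The `url_keywords` function and the sample paths are made up for illustration; this is not how any search engine actually tokenizes URLs, just a way to see the effect.

```python
import re


def url_keywords(path):
    """Split a URL path into lowercase words, treating '_' like '-', '/' and '.'."""
    return [word for word in re.split(r"[-_/.\s]+", path.lower()) if word]


# Hypothetical paths: the same words, joined two different ways.
print(url_keywords("/red_shoes/mens_running.html"))
print(url_keywords("/red-shoes/mens-running.html"))
```

Both calls produce the same list of words, which is the practical upshot of the announcement: under this kind of handling, a URL built with underscores exposes the same keywords as one built with dashes.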

I’ve previously opined that classic SEO may become extinct in favor of Usability, and announcements like this fluid handling of underscores would tend to support that premise. Google, Yahoo! and MS Live Search have been actively trying to reduce barriers to indexation and ranking through changes like this one, improved handling of redirection, and myriad other adjustments. Together these both obviate the need for technical optimizers and reduce the ability to artificially influence rankings through technical improvements.

I continue to think that the need for SEOs may decrease until they’re perhaps no longer necessary, so natural search marketing shops will likely evolve into site-building/design studios, copy writing teams, and usability research firms. The real question would be: how soon will it happen?

Posted by Chris of Silvery on 08/02/2007

Filed under: Best Practices, Google, Link Building, MSN Search, URLs, Yahoo

Build It Wrong & They Won’t Come: Coca-Cola’s Store

I just wrote an article comparing Coke’s and Pepsi’s homepage redirection, concluding that Pepsi actually does a better job, though both of them ultimately have a nonoptimal setup for the purposes of search optimization. Clunky homepage redirection isn’t the only search marketing sin that Coca-Cola has committed: their online product shopping catalog is very badly designed for SEO as well, and I’ll outline a number of reasons why.

In this article and in the redirection article, I’m criticizing Coca-Cola’s technical design quite a bit, but I’m not trying to embarrass them. Like any good American boy, I love Coca-Cola (particularly Coke Classic and Cherry Coke). In fact, this could ultimately benefit them, if they take my free assessment and use it as a guide for improving their site. I’m doing this because Coca-Cola is the most-recognized brand worldwide, and the sorts of errors they’re making in their natural search channel are all too common in ecommerce sites. I chose Coca-Cola’s e-store because it makes such a great example of the sorts of things that online marketers need to focus upon. If such a juggernaut of a company, with huge advertising and marketing budgets, makes these sorts of mistakes, you could be making them, too.

Posted by Chris of Silvery on 07/16/2007

Filed under: Best Practices, brand names, Search Engine Optimization, SEO, Worst Practices | Tags: Coca-Cola, Coke, Etail-Optimization, Online-Catalog-Optimization, Online-Store-Optimization, SEO