Retailers Recession Proofing Through Optimizing Internet Retail Sites

Broad economic fears are prompting many retailers and other businesses to step up their promotional game. While some retailers are cutting back on advertising or paring down their inventory, there are compelling reasons to intensify marketing efforts in order to offset the expected reduction in average customer spending. If your competitors are cutting back, you could not only get a chance to dominate your sector but even increase profits by taking market share from them.

The internet is a prime area to focus on during this period: it reduces distance barriers and makes products easier for buyers to locate, and efforts to increase sales through this medium often cost less than many other options. One of the most cost-effective forms of internet promotion is increasing the “natural” traffic that search engines refer to your site.

Many internet retailers haven’t connected the dots sufficiently (more…)

Posted by Chris of Silvery on 02/14/2008

Filed under: Dynamic Sites, Marketing, Search Engine Optimization, SEO Ecommerce, GravityStream, Internet Retailers, Recession, Search Engine Optimization, SEO

Advice on Subdomains vs. Subdirectories for SEO

Matt Cutts recently revealed that Google is now treating subdomains much more like subdirectories of a domain, in the sense that Google wishes to limit how many results from a single site show up for a given keyword search. In the past, some search marketers attempted to use keyworded subdomains to improve referral traffic from search engines, deploying many keyword subdomains for terms for which they hoped to rank well.

Not long ago, I wrote an article on how some local directory sites were using subdomains in an attempt to achieve good ranking results in search engines. In that article, I concluded that most of these sites were ranking well for other reasons not directly related to the presence of the keyword as a subdomain — I showed some examples of sites which ranked equally well or better in many cases where the keyword was a part of the URI as opposed to the subdomain. So, in Google, subdirectories were already functioning just as well as subdomains for the purposes of keyword rank optimization. (more…)

Posted by Chris of Silvery on 12/12/2007

Filed under: Best Practices, Content Optimization, Domain Names, Dynamic Sites, Google, Search Engine Optimization, SEO, Site Structure, URLs, Worst Practices Domain Names, Google, host crowding, language seo, Search Engine Optimization, SEO, seo subdirectories, subdomain seo, subdomains

Build Your Own Local Search Engine

Quite a few bloggers out there have clued in to how using Eurekster’s Swickis on their blogs can be a cool feature enhancement, providing custom thematic search engines for their users. If you have a blog that focuses on particular subject matter, including useful links and other features like these custom search engines can help to build loyalty and return visits. But for webmasters who build local guides for small communities, Swickis are also an ideal way to rapidly provide robust, location-specific search functionality.

Over time, I’ve looked at a lot of small community guides, and many of the people who create them are masters of finding free widgets to provide functionality for things like weather forecasts, news headlines, and local events. But, many of these sites are missing even simple search functionality to help users find the local info on their site as well as elsewhere on the internet. (more…)

Posted by Chris of Silvery on 10/31/2007

Filed under: Blog Optimization, Dynamic Sites, Local Search, Searching, Tools Custom-Search-Engines, Eurekster, search-engines, Search-Widgets, Swickis

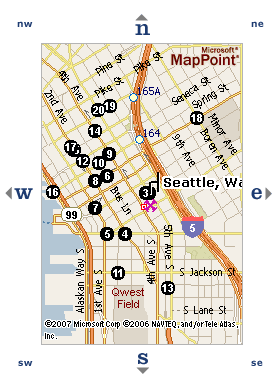

Dealer Locator & Store Locator Services Need to Optimize

My article on local SEO for store locators was just published on Search Engine Land, and any company that has a store locator utility ought to read it. Many large companies provide a way for users to find their local stores, dealers, or authorized resellers. The problem is that these sections are usually hidden from the search engines behind search submission forms, JavaScript links, HTML frames, and Flash interfaces.

For many national or regional chain stores, providing dealer-locator services with robust maps, driving directions and proximity search capability is outside of their core competencies, and they frequently choose to outsource that development work or purchase software to enable the service easily.

I did a quick survey and found a number of companies providing dealer locator or store finder functionality: (more…)

Posted by Chris of Silvery on 09/13/2007

Filed under: Best Practices, Content Optimization, Dynamic Sites, Local Search Optimization, Maps, Search Engine Optimization, SEO, Site Structure chain-stores, dealer-locators, Local Search Optimization, local-SEO, store-location-software, store-locators

Double Your Trouble: Google Highlights Duplication Issues

Maile Ohye posted a great piece on Google Webmaster Central on the effects of duplicate content caused by common URL parameters. There is great information in that post, not least that it validates exactly what a few of us have stated for a while: duplication should be addressed because it can water down your PageRank.

Maile suggests a few ways of addressing dupe content, and she also reveals a few details of Google’s workings that are interesting, including: (more…)
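
To make the URL-parameter problem concrete, here is a minimal sketch of how a site owner might spot URLs that differ only in parameters that don't change the page content. It is an illustration under stated assumptions, not a method taken from the Google post: the parameter names in `IGNORED_PARAMS` and the helper names are hypothetical, and a real site would need its own list.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical list of parameters assumed not to change page content.
IGNORED_PARAMS = {"sessionid", "sid", "ref", "origin"}


def canonicalize(url):
    """Return the URL with content-neutral parameters dropped and the rest sorted."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # parameter order should not create a "new" page
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def group_duplicates(urls):
    """Group URLs that collapse to the same canonical form."""
    groups = {}
    for url in urls:
        groups.setdefault(canonicalize(url), []).append(url)
    return groups


if __name__ == "__main__":
    crawled = [
        "http://Example.com/item?id=7&sessionid=abc",
        "http://example.com/item?sessionid=xyz&id=7",
    ]
    for canonical, variants in group_duplicates(crawled).items():
        print(canonical, "<-", len(variants), "variant(s)")
```

Any group with more than one variant is a candidate for consolidation, since links pointing at the variants are being split across what is really one page.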

Posted by Chris of Silvery on 09/12/2007

Filed under: Best Practices, Dynamic Sites, Google, PageRank, Search Engine Optimization, SEO, Site Structure, URLs Canonicalization, duplicate-content, duplication, Google, Search Engine Optimization, SEO

Automatic Search Engine Optimization through GravityStream

I’ve had a lot of questions about my new work since I joined Netconcepts a little over three months ago as their Lead Strategist for their GravityStream product/service. My primary role is to bring SEO guidance to clients using GravityStream, and to provide thought leadership to the ongoing development of the product and business.

GravityStream is a technical solution that provides outsourced search optimization to large, dynamic websites. Automatic SEO, if you will. Here’s what it does…

Posted by Chris of Silvery on 07/17/2007

Filed under: Content Optimization, Dynamic Sites, HTML Optimization, Search Engine Optimization, SEO, Site Structure, Tools Automatic-Search-Engine-Optimization, GravityStream, Netconcepts, Outsourced-Search-Engine-Optimization, Search Engine Optimization, SEO

The Game of Life: New Chromatic Projection Method

I’ve been interested in The Game of Life ever since I heard about it back in the 70s/80s, some time around when my dad bought us our first personal computers. The Game of Life was invented by the mathematician John Horton Conway as he worked on a way of modeling life-like behaviors with a simple set of rules. Conway’s Game of Life was popularized by Martin Gardner, the well-known writer of a popular science column in Scientific American.

Tons of hobbyists and computer programmers cut their eye-teeth by playing the Game of Life through programs copied out of magazines onto their PCs. I recall copying one of these programs out of a computer magazine into either our Timex-Sinclair 1000 or Commodore 64. I can’t recall whether it was Dr. Dobb’s or one of the myriad specialty Commodore zines that my dad was always buying.

Anyway, my aunt Amelia recently gave me a book for Christmas from my Amazon want list – it was New Constructions in Cellular Automata (Santa Fe Institute Studies in the Sciences of Complexity Proceedings), a few different papers all nicely bound up by the Santa Fe Institute. (I’m a big fan of quite a few theories regarding Complexity, Economics, Biology, etc which have come out of the Santa Fe Institute.) After looking over the papers from various researchers that have studied different aspects of Cellular Automata, I started thinking that it could be worthwhile to set up the Game of Life with some color/display elements which can help with predictive display of Life grouping evolution. I’ve written a little program that does this, so read on if you’re interested.

Glider Pattern animated with color path projection
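
For readers who have never typed Life in from a magazine, here is a minimal sketch of the standard rules in Python. It is not the chromatic projection program described in this post. The `classify` helper is my own assumption about one simple way to get "predictive" information (which cells are about to be born, survive, or die) that a display could then map to colors.

```python
from collections import Counter


def neighbor_counts(live):
    """Count live neighbors for every cell adjacent to at least one live cell."""
    return Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )


def step(live):
    """Advance one generation; `live` is a set of (row, col) live cells."""
    counts = neighbor_counts(live)
    return {
        cell
        for cell, n in counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


def classify(live):
    """Hypothetical helper: split cells by their fate in the next generation."""
    nxt = step(live)
    return {
        "born": nxt - live,       # dead now, alive next
        "survives": nxt & live,   # alive now and next
        "dies": live - nxt,       # alive now, dead next
    }


GLIDER = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

if __name__ == "__main__":
    cells = GLIDER
    for generation in range(4):
        print(generation, sorted(cells), classify(cells))
        cells = step(cells)
```

After four generations the glider reappears in its original shape, shifted one row and one column, which makes it a handy sanity check for any Life implementation.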

Posted by Chris of Silvery on 02/14/2007

Filed under: Dynamic Sites, General, technology cellular-automata, game-of-life, John-Conway, Martin-Gardner, The-Game-of-Life

Google Releases New Related Links Feature

Google Labs has just released a new feature called Google Related Links which allows webmasters to place a little tabbed user-interface navigation box on their site. The box will pull in links to sites related to the content on your webpage, allowing you to display related links to Searches, News, and Web Pages.

I’ve copied a screengrab below so that you can see how the real thing looks:

Perhaps this is a useful feature for some, but I’m thinking that most web editors prefer to choose their own related links and are better able to choose appropriate ones than this automated option.

So, are there other incentives to adding the code?

Their FAQ states that they do not pay publishers for adding the feature “at this time”. This would seem to hint that they’re considering paying for the referral traffic, which I think most publishers would agree they should.

A question: will Google bias the links supplied by this application towards searches which have better pay-for-performance ad revenue for themselves?

There’s something just a hair insidious seeming about this as well, however. On the PR face of it, Google represents that they want to help people out, make life easier, and enable people to find what they want to find on the web. But, Google is integrating itself more and more deeply into people’s websites, increasing dependency upon them. They provide search services for sites already, they’ve launched applications to allow people to design webpages through them, and they’re providing people with free web usage reporting and maps.

If there’s one thing that people have learned within the IT disaster recovery industry, it’s that placing too much dependency upon a single entity creates a single point of failure in a system. Admittedly, Google has nice infrastructure, but have you ever seen a company that never had a technical failure of some sort? What happens as an increasing share of the internet itself is supported by Google, rather than by distributed systems?

In any case, it will be interesting to watch how many sites adopt this new feature without monetary incentives to do so.

Posted by Chris of Silvery on 04/05/2006

Filed under: Dynamic Sites, General, Google, Research and Development, Tools

Is your site unfriendly to search engine spiders like MSNBot?

Microsoft blogger Eytan Seidman on their MSN Search blog offers some very useful specifics on what makes a site crawler unfriendly, particularly to MSNBot:

An example of a page that might look “unfriendly” to a crawler is one that looks like this: http://www.somesite.com/info/default.aspx?view=22&tab=9&pcid=81-A4-76&section=848&origin=msnsearch&cookie=false….URL’s with many (definitely more than 5) query parameters have a very low chance of ever being crawled….If we need to traverse through eight pages on your site before finding leaf pages that nobody but yourself points to, MSNBot might choose not to go that far. This is why many people recommend creating a site map and we would as well.
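
If you want to audit your own URLs against that rule of thumb, a minimal sketch in Python follows. The threshold of 5 comes from the quote above; the function names and the idea of running this over a list of your own URLs are assumptions for illustration, and this is in no way how MSNBot itself decides what to crawl.

```python
from urllib.parse import urlsplit, parse_qsl

# Threshold taken from the quoted guidance ("definitely more than 5").
MAX_QUERY_PARAMS = 5


def query_param_count(url):
    """Return the number of query parameters in the URL."""
    return len(parse_qsl(urlsplit(url).query, keep_blank_values=True))


def looks_crawler_unfriendly(url, limit=MAX_QUERY_PARAMS):
    """Flag URLs carrying more query parameters than the limit."""
    return query_param_count(url) > limit


if __name__ == "__main__":
    # URL modeled on the example in the quote.
    url = (
        "http://www.somesite.com/info/default.aspx"
        "?view=22&tab=9&pcid=81-A4-76&section=848&origin=msnsearch&cookie=false"
    )
    print(query_param_count(url), looks_crawler_unfriendly(url))
```

Running a check like this across a crawl of your own site gives a quick list of pages worth rewriting to shorter, cleaner URLs.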

Posted by stephan of stephan on 11/21/2004

Filed under: Dynamic Sites, Spiders, URLs

Google Store makeover still not wooing the spiders

You may recall my observation a few months ago that the Google Store is not all that friendly to search engine spiders, including Googlebot. Now that the site has had a makeover, and the session IDs have been eliminated from the URLs, the many tens of thousands of duplicate pages have dropped to a mere 144. This is a good thing, since there’s only a small number of products for sale on the site. Unfortunately, a big chunk of those hundred-and-some search results lead to error pages. So even after a site rebuild, Google’s own store STILL isn’t spider friendly. And if you’re curious what the old site looked like, don’t bother checking the Wayback Machine for it. Unfortunately, the Wayback Machine’s bot has choked on the site since 2002, so all you’ll find for the past several years are “redirect errors”.

Posted by stephan of stephan on 10/05/2004

Filed under: Dynamic Sites, Google, Spiders