Google Testing New Local OneBox Layout & Addresses in PPC Ads

This morning, Greg Sterling mentioned that Google is apparently experimenting with showing full street addresses below the URLs in PPC ads.

Even more interesting, a thread at WebmasterWorld now reports a new layout of local listings within Google SERPs: its screengrab shows the Google Maps OneBox results on the right side of the page, apparently above the Sponsored Links ads.

Is this just routine UI/usability testing, or could it signal more upheaval in the local SERPs?

Posted by Chris of Silvery on 01/08/2008

Filed under: Design, Google, Local Search | Tags: Google, Google Local, Google-Maps, Local Search, SERPs

ReachLocal Becomes Authorized Google AdWords Reseller

ReachLocal announced today that it has formed a strategic alliance with Google to become an authorized AdWords reseller. Kevin Heisler at SEW reports that this will give ReachLocal a leg up on local search competitors that lack the same status in the Google ecosystem.

ReachLocal also sells local ads into Yahoo!, MSN, Ask, AOL, and my old company, Superpages.com.

I was privileged to be given a tour of the ReachLocal offices here in Dallas back in September, (more…)

Posted by Chris of Silvery on 01/08/2008

Filed under: Google, Local Search, Monetization of Search, News, Yellow Pages | Tags: Adwords, Google, Google Adwords, Local Search, online-advertising, reachlocal

Google Takes RSS & Atom Feeds out of Web Search Results

Google just announced this week that they have started removing RSS & Atom feeds from their search engine results pages (“SERPs”), something that makes a lot of sense for improving the quality and usability of their results. (They also explain why they aren’t doing the same for podcast feeds.)

This might confuse search marketers about the value of providing RSS feeds on one’s site for natural search marketing. Here at Netconcepts, we’ve recommended using RSS for retail sites and blogs for quite some time, and we continue to do so. Webmasters often pull in syndicated feeds to provide helpful content and utilities on their sites, so providing feeds can help you gain external links back to your site when webmasters display your feed content on their pages.

Google has removed RSS feed content from their regular SERPs, but they haven’t necessarily reduced the benefit of the links produced when those feeds are adopted and displayed on other sites. When developers use RSS and Atom feeds, they pull in the feed content and typically redisplay it on their own pages as regular HTML. When those pages link back to you, as many feed-displaying pages do, the links pass PageRank back to the site originating the feeds, which helps build up its ranking value.
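
To make that syndication path concrete, here is a minimal Python sketch of what a site displaying someone else’s feed might do: parse an RSS 2.0 document and redisplay its items as ordinary HTML links pointing back to the originating site. The sample feed, the example.com URLs, and the `feed_to_html` function are hypothetical; real feed consumers vary widely, and many also handle Atom, which this sketch does not.

```python
import xml.etree.ElementTree as ET
from html import escape

# Hypothetical sample feed; the URLs are placeholders, not real posts.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example Blog</title>
    <link>https://www.example.com/</link>
    <item>
      <title>First post</title>
      <link>https://www.example.com/first-post</link>
    </item>
    <item>
      <title>Second post &amp; more</title>
      <link>https://www.example.com/second-post</link>
    </item>
  </channel>
</rss>"""


def feed_to_html(rss_xml: str) -> str:
    """Render RSS 2.0 items as an HTML list whose anchors point back to the feed's source site."""
    channel = ET.fromstring(rss_xml).find("channel")
    items = []
    for item in channel.findall("item"):
        title = item.findtext("title", default="")
        link = item.findtext("link", default="")
        # Plain <a href> links like these are what crawlers can follow back to the originating site.
        items.append(f'<li><a href="{escape(link)}">{escape(title)}</a></li>')
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


print(feed_to_html(SAMPLE_RSS))
```

The redisplayed page is ordinary HTML, so the links back to the feed owner count like any other inbound links, even though the raw feed itself no longer shows up in Google’s results.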

So, don’t stop using RSS or Atom feeds!

Posted by Chris of Silvery on 12/19/2007

Filed under: Blog Optimization, Google, URLs | Tags: ATOM, Feeds, Google, PageRank, RSS

Advice on Subdomains vs. Subdirectories for SEO

Matt Cutts recently revealed that Google is now treating subdomains much more like subdirectories of a domain, in the sense that Google wants to limit how many results from a single site show up for a given keyword search. In the past, some search marketers tried to boost search referral traffic by deploying many keyword subdomains, one for each term they hoped to rank well for.
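
As a rough illustration of that host-crowding idea, the Python sketch below caps how many results from one site appear in a ranked list, treating subdomains as part of the same site. It is a toy model under stated assumptions, not Google’s algorithm: the `crowd_results` and `site_key` names, the limit of two results per site, and the “last two hostname labels” rule for identifying a site are all hypothetical simplifications.

```python
from urllib.parse import urlsplit


def site_key(url: str) -> str:
    """Collapse subdomains into one site key.

    Simplifying assumption: the last two hostname labels identify the site.
    This is wrong for suffixes like .co.uk; a real implementation would
    consult the Public Suffix List.
    """
    host = urlsplit(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def crowd_results(ranked_urls: list[str], max_per_site: int = 2) -> list[str]:
    """Keep at most max_per_site results per site, preserving rank order."""
    seen: dict[str, int] = {}
    kept = []
    for url in ranked_urls:
        key = site_key(url)
        if seen.get(key, 0) < max_per_site:
            kept.append(url)
            seen[key] = seen.get(key, 0) + 1
    return kept


# Hypothetical ranked results for a query like "dallas plumbers".
results = [
    "http://plumbers.example.com/",
    "http://dallas-plumbers.example.com/",
    "http://www.example.com/plumbers/dallas",
    "http://www.other-site.org/plumbing",
]
print(crowd_results(results))
```

With subdomains collapsed into one site key, keyword subdomains and keyword subdirectories compete for the same limited slots, so spinning up extra subdomains no longer buys additional listings in this model.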

Not long ago, I wrote an article on how some local directory sites were using subdomains in an attempt to rank well in search engines. In that article, I concluded that most of these sites were ranking well for other reasons not directly related to having the keyword in a subdomain; I showed examples of sites that ranked equally well or better when the keyword was part of the URI path rather than the subdomain. So, in Google, subdirectories were already working just as well as subdomains for keyword rank optimization. (more…)

Posted by Chris of Silvery on 12/12/2007

Filed under: Best Practices, Content Optimization, Domain Names, Dynamic Sites, Google, Search Engine Optimization, SEO, Site Structure, URLs, Worst Practices | Tags: Domain Names, Google, host crowding, language seo, Search Engine Optimization, SEO, seo subdirectories, subdomain seo, subdomains

Google Requests Help Fighting Malware

Last week, I whined a bit about Google results containing many links to malware sites that had ranked by using well-known black hat tactics. InternetNews.com is now reporting that Google is asking the altruistic public for help fighting the malware offenders. Google’s Security blog requests more assistance against the bad guys, noting that Google has improved over the past year and citing the warnings it now pops up when users click a link where it has detected possible malware.

Here’s one suggestion I have: (more…)

Posted by Chris of Silvery on 12/02/2007

Filed under: Google, Security | Tags: black hat, black-hat-seo, Google, Malware, online security

Recent Google Improvements Fail To Halt Massive Malware Attack

Various news sites are reporting that a malware attack was deployed in the last couple of days, apparently based entirely upon black hat SEO tactics.

Software security company Sunbelt blogged about how the attack was carried out: a network of spambots apparently added links pointing to the bad sites into blog comments and forums, in some cases over a period of months, enabling those sites to achieve fair rankings in search engine result pages for a great many keyword combinations. The pages either contained iframes that attempted to load malware onto visitors’ machines, or perhaps began redirecting to the malware sites at some point after achieving rankings. Sunbelt provided interesting screenshots of the SERPs in Google:

Sunbelt also showed screenshots of some of the keyword-stuffed pages that apparently got indexed:

I think it’s not at all a coincidence (more…)
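
The hidden-iframe trick Sunbelt described can often be spotted in page source. The Python sketch below uses the standard library’s `html.parser` to flag iframes that are sized or styled to be invisible. The `HiddenIframeFinder` class, the sample page, and the heuristic itself (a width or height of 0 or 1, or an inline `display:none`/`visibility:hidden` style) are assumptions for illustration; it would not catch script-injected iframes or the delayed redirects mentioned above.

```python
from html.parser import HTMLParser


class HiddenIframeFinder(HTMLParser):
    """Flag <iframe> tags that are sized or styled to be invisible.

    Heuristic assumption: an iframe with width/height of 0 or 1, or with
    display:none / visibility:hidden in its inline style, is suspicious.
    Real malware scanners use far more signals than this.
    """

    def __init__(self):
        super().__init__()
        self.suspicious = []

    def handle_starttag(self, tag, attrs):
        if tag != "iframe":
            return
        a = {name: (value or "") for name, value in attrs}
        tiny = a.get("width", "").strip() in {"0", "1"} or a.get("height", "").strip() in {"0", "1"}
        style = a.get("style", "").replace(" ", "").lower()
        hidden = "display:none" in style or "visibility:hidden" in style
        if tiny or hidden:
            self.suspicious.append(a.get("src", ""))


# Hypothetical keyword-stuffed page with two hidden iframes and one normal one.
page = """
<p>cheap widgets cheap widgets cheap widgets</p>
<iframe src="http://bad.example/x" width="1" height="1"></iframe>
<iframe src="http://ok.example/video" width="640" height="360"></iframe>
<iframe src="http://bad.example/y" style="display: none"></iframe>
"""
finder = HiddenIframeFinder()
finder.feed(page)
print(finder.suspicious)  # ['http://bad.example/x', 'http://bad.example/y']
```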

Posted by Chris of Silvery on 11/28/2007

Filed under: General, Google, News, Tricks, Worst Practices | Tags: black-hat-seo, blackhat-seo, Google, Malware, spam, Sunbelt

Google Hiding Content Behind an Image on their SERPs

Tamar Weinberg at Search Engine Roundtable reports that in a Google Groups forum, a Webmaster Central team member stated that you could use something like the CSS z-index property in DHTML styles to hide text or links behind an image, so long as the hidden text or link matches what’s represented in the image.

I think it’s a good thing that they do allow this sort of use, because it appears to me that they’re doing this very thing on their own search results pages! If you refresh a search page, you can see what they’re hiding under their own logo:

…a text link pointing to their homepage.

Now, the interesting question I’d have for the Google team is this: the rule is straightforward if the image itself contains text, but what would be allowable if the image contains no text but, say, a picture of a lion? There are many different ways to express what that lion is, from “lion” to “tawny, golden-furred lion king”.

Or, should we be assuming that images that are written over text and links are only allowable when the image contains text?

The Google Webmaster Tools contributor also states that you could use an image’s ALT and TITLE attributes to accomplish essentially the same thing. This is sorta funny, because one could say the same thing about Google’s use of the technique on their own page: why are they doing it?

One immediately wonders how Google polices this, since they’re apparently not frowning upon pages drawing images over text/links in all cases. They can detect images layered over text, but would they have every instance checked by a human? Or are they using optical character recognition algos to automatically check the text within images against the text being hidden?
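
One way to picture the automated check speculated about here is the Python sketch below: compare the hidden text against the text found in the image and accept it only if the two closely match. Everything in it is an assumption, not a documented Google process: the image text is presumed to come from some OCR step that isn’t shown, and the token-overlap (Jaccard) measure, the 0.8 threshold, and the function names are illustrative choices.

```python
import re


def normalize_tokens(text: str) -> set[str]:
    """Lowercase the text and split it into word tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def hidden_text_matches_image(hidden_text: str, image_text: str, threshold: float = 0.8) -> bool:
    """Return True if the hidden text closely matches the text shown in the image.

    image_text is assumed to come from an OCR step (not implemented here).
    The Jaccard similarity and the 0.8 threshold are hypothetical choices.
    """
    hidden = normalize_tokens(hidden_text)
    shown = normalize_tokens(image_text)
    if not hidden and not shown:
        return True
    overlap = len(hidden & shown) / len(hidden | shown)
    return overlap >= threshold


print(hidden_text_matches_image("Google", "Google"))                      # True
print(hidden_text_matches_image("cheap flights hotels deals", "Google"))  # False
```

An image with no text in it, like the lion example, gives a check of this kind nothing to compare against, which is why that case seems hard to police automatically.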

In any case, the fact that Google is doing this on their own site could be taken as further confirmation that they don’t consider the technique bad in and of itself, as long as the practice is conservative and the text/link just describes the text content within the image.

Posted by Chris of Silvery on 11/19/2007

Filed under: Google, Image Optimization, Search Engine Optimization, SEO | Tags: Google, Search Engine Optimization, SEO, z-index, zindex

Google Maps Now Sports Special Logo Treatment

I noticed that Google Maps started using a special logo treatment this morning:

The logo is apparently promoting Geography Awareness Week 2007.

Google has created special logos for holidays and other events on its main logo for quite a number of years, but I believe this is one of the first ever done on one of its vertical search properties. Unfortunately, the logo doesn’t link to the special event page, and it looks a lot like a roasted turkey; it’s so small that it’s hard to make out, so I’m betting a lot of Google Maps users won’t have a clue what it signifies.

Update: the Google Lat Long blog mentions their support of Geography Awareness Week.

Posted by Chris of Silvery on 11/13/2007

Filed under: Google, Local Search, Maps, Marketing | Tags: Google, Google-Maps, Logos

Google’s Advice For Web 2.0 & AJAX Development

Yesterday, Google’s Webmaster Blog gave some great advice for Web 2.0 application developers in their post titled “A Spider’s View of Web 2.0“.

In that post, they recommend providing alternative navigation options on Ajaxified sites so that the Googlebot spider can index your site’s pages, and so that users who have certain dynamic functions disabled in their browsers can still get around. They also recommend designing sites with “Progressive Enhancement”: building a site up in layers, beginning with the basics. Start out with simple HTML link navigation, and then add JavaScript/Java/Flash/AJAX features on top of that simple HTML structure.
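
To see roughly what a “spider’s view” means, the Python sketch below collects only the plain `<a href>` links that a crawler which doesn’t execute JavaScript could follow. The `CrawlableLinkExtractor` class, the sample navigation, and the `loadMap()` function are hypothetical, and real crawlers’ handling of scripts is more involved than this simplification.

```python
from html.parser import HTMLParser


class CrawlableLinkExtractor(HTMLParser):
    """Collect hrefs that a crawler which does not run JavaScript could follow.

    Simplifying assumption: only <a href="..."> values count, and
    "javascript:" pseudo-URLs and bare "#" fragments are skipped because
    they lead nowhere without script execution.
    """

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href or href.startswith("#") or href.lower().startswith("javascript:"):
            return
        self.links.append(href)


# Hypothetical mashup navigation: one plain HTML link, two script-only links.
page = """
<ul id="nav">
  <li><a href="/maps/dallas">Dallas map</a></li>
  <li><a href="#" onclick="loadMap('austin')">Austin map</a></li>
  <li><a href="javascript:loadMap('houston')">Houston map</a></li>
</ul>
"""
extractor = CrawlableLinkExtractor()
extractor.feed(page)
print(extractor.links)  # ['/maps/dallas']
```

Only the Dallas link survives; the other two items depend entirely on script execution. Under Progressive Enhancement, all three would start out as plain HTML links like the first one, with the AJAX map loading layered on top.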

Before the Google Webmaster team had posted those recommendations, I’d published a little article early this week on Search Engine Land on the subject of how Web 2.0 and Map Mashup Developers neglect SEO basics. A month back, my colleague Stephan Spencer also wrote an article on how Web 2.0 is often search-engine-unfriendly and how using Progressive Enhancement can help make Web 2.0 content findable in search engines like Google, Yahoo!, and Microsoft Live Search.

Even earlier, back in May, our colleague P.J. Fusco wrote an article for ClickZ on How Web 2.0 Affects SEO Strategy.

We’re not just recycling each other’s work in all this; we’ve each independently become convinced that problematic Web 2.0 site design can limit a site’s traffic. If your pages don’t get indexed by the search engines, there’s a far lower chance of users finding your site. With just a modest amount of additional care and work, Web 2.0 developers can optimize their applications, and the benefits are clear. Wouldn’t you like to make a little extra money every month from ad revenue? Better yet, how about if an investment firm, or a Google or Yahoo, were to offer you millions for your cool mashup concept?!?

But, don’t just listen to all the experts at Netconcepts — Google’s confirming what we’ve been preaching for some time now.

Posted by Chris of Silvery on 11/07/2007

Filed under: Best Practices, Google, Search Engine Optimization, SEO | Tags: AJAX, Google, Mashups, Progressive-Enhancement, Search Engine Optimization, SEO, Web-2.0

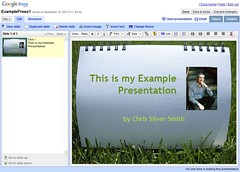

Google Docs Releases Presentations

Last night, Google announced the release of their presentation software as another feature in Google Docs. This follows close on the heels of a number of us posting about how the Google presentation software was soon to be released. The interface is really easy to use and intuitive; very fluid. Here’s a screengrab of the example slide I made within just seconds of going in:

The presentation software is extremely simple, though, so there aren’t a lot of bells and whistles. The slides are completely static, so there’s no option for adding transition effects or animations. (more…)

Posted by Chris of Silvery on 09/18/2007

Filed under: Google, Tools | Tags: Google, Google-Apps, Google-Docs, Google-Presentations, Presentation-Software, Presently